Schedule a Site Audit for the primary domain in any rank tracking campaign to determine if there are content or technical issues that may be affecting your SEO, site performance and visitor satisfaction.

Campaign Settings > Site Audit

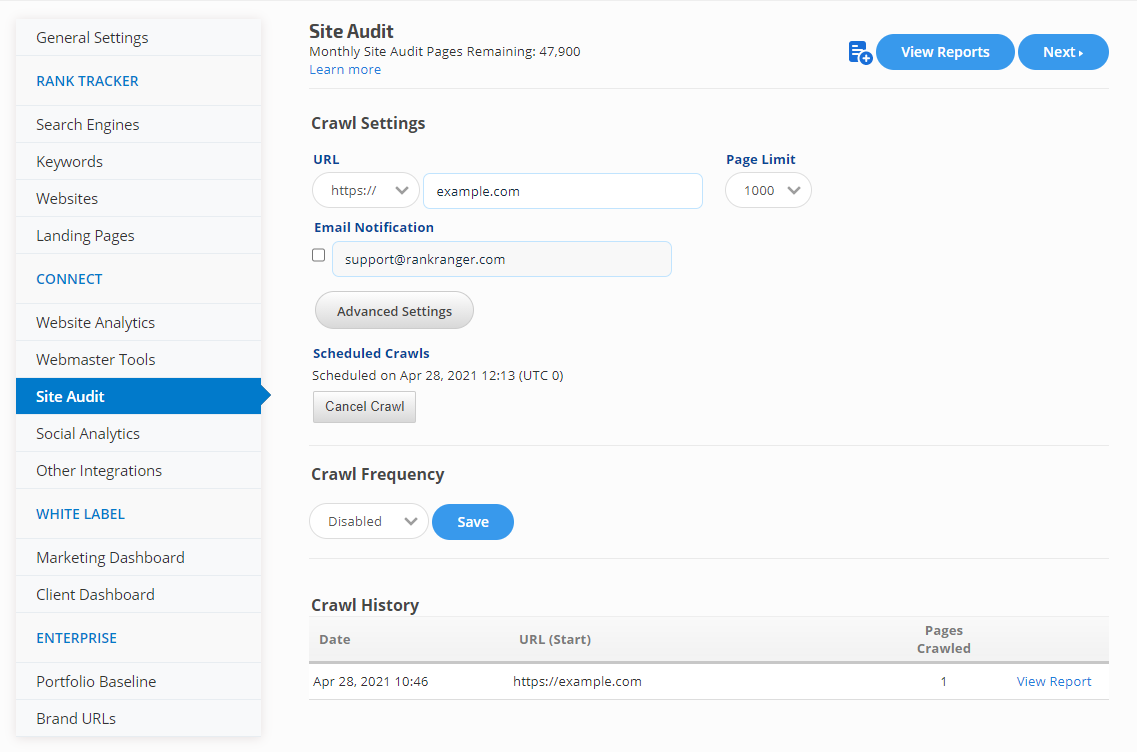

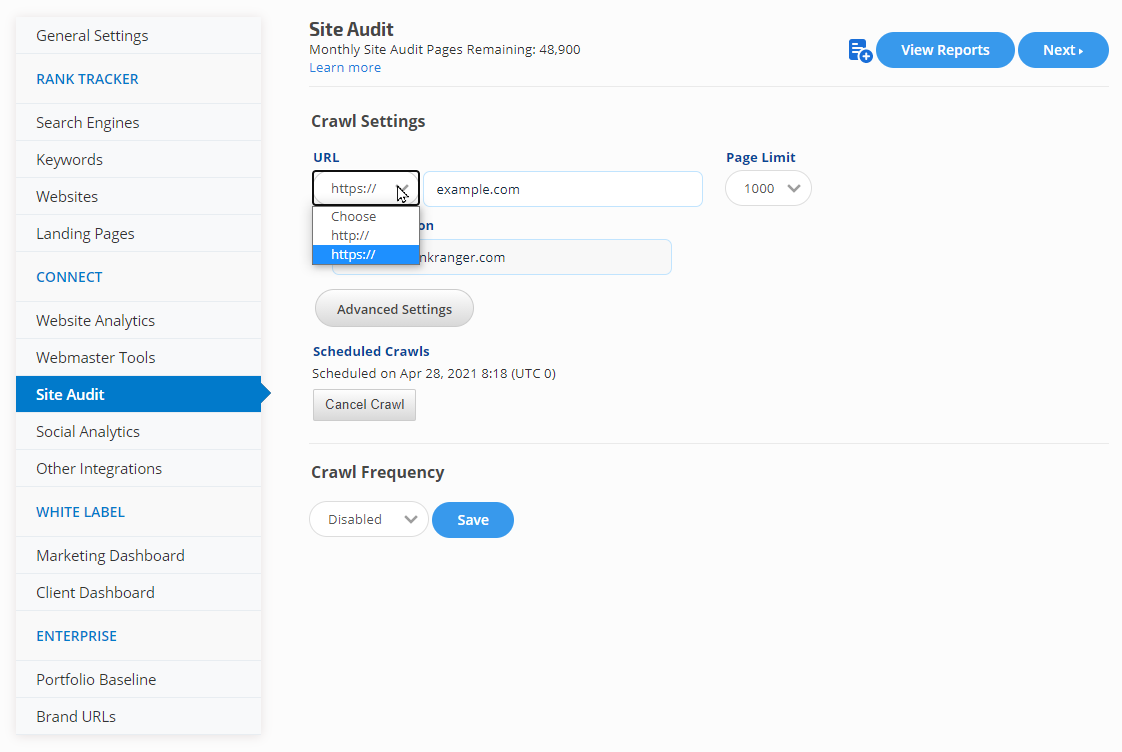

Campaign Settings: Site Audit Screen

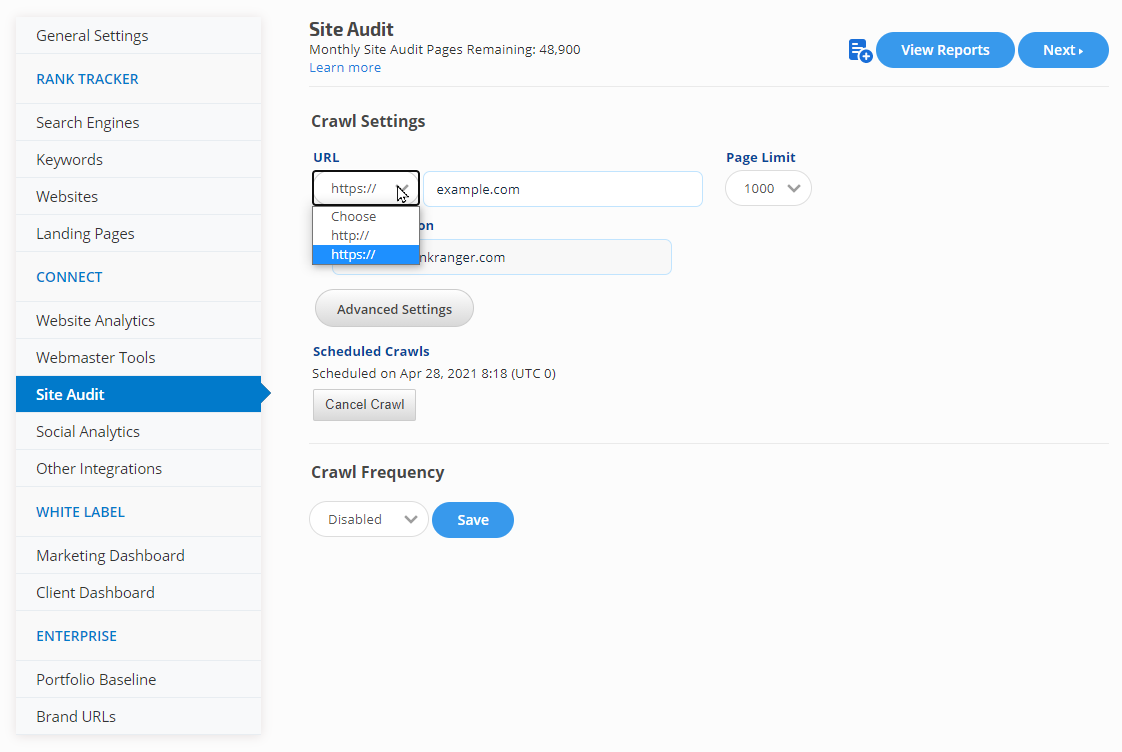

- URL type: select http or https

- Domain: must match the campaign's primary domain (from the General Settings screen). If the site's pages are served from the "www" version and the non-"www" version does not redirect to it, include "www" in the domain field. You can limit the crawl to a specific sub-domain or sub-directory, e.g., example.com/blog/

- Page Limit: select the number of pages the crawl should return audit data for. Your package has a monthly page limit that should be taken into consideration when scheduling a site audit.

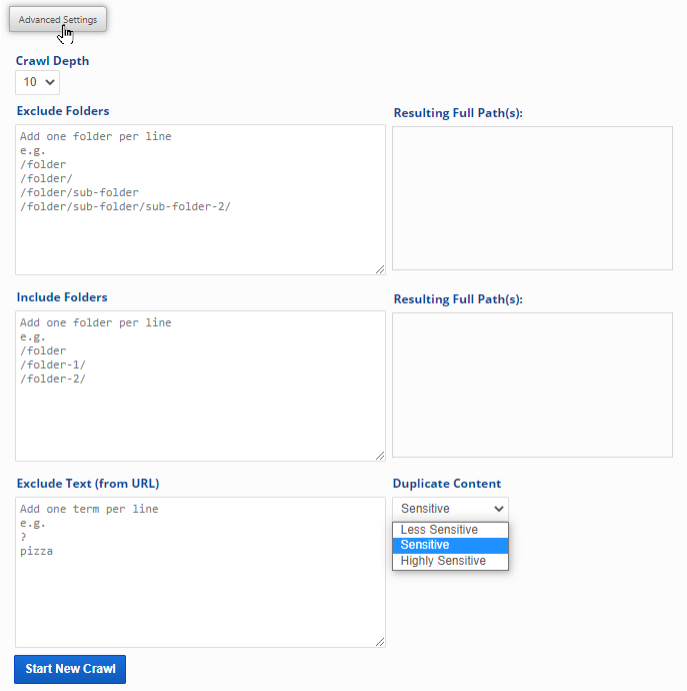

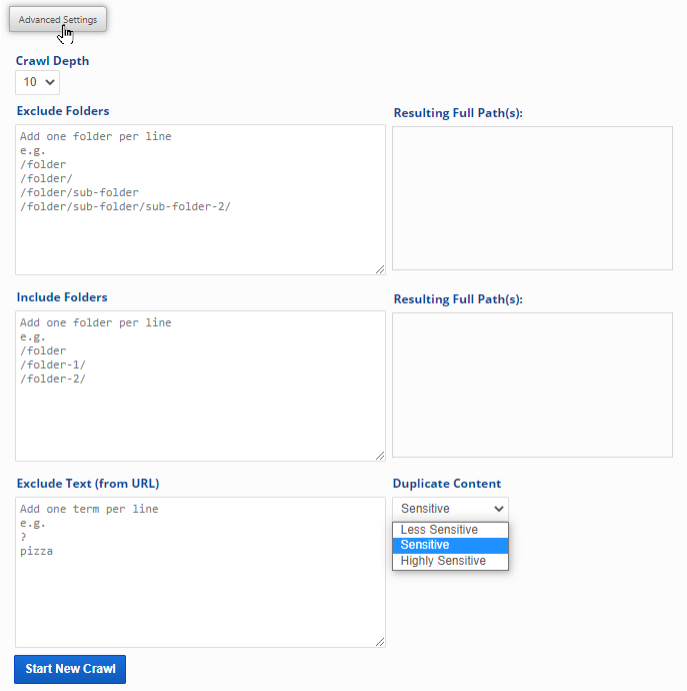

Advanced Settings

- Crawl Depth: the number of clicks (from the domain URL entered) that it would take a user to reach the pages that you want the audit to include

- Email Notification: check the box if you want to receive an email message when the site audit has been completed. The default address is that of the account owner; however, once you select the option, you can enter the email address that you want the message sent to. If you select this option, please be sure to whitelist email from rankranger.com

- Exclude Folders: if you want specific site folders excluded from the crawl, enter one folder address per line without the domain name, e.g., to exclude https://www.example.com/shop/categories and https://www.example.com/company-info, enter:

/shop/categories

/company-info

- Include Folders: if you want the crawl limited to specific folders, enter one folder address per line without the domain name

- Exclude Text (from URL): if you want to exclude URLs that contain certain words or phrases from the crawl, enter them one per line

- Duplicate Content: select the sensitivity level for duplicate content detection in your new crawl. The options are: Less Sensitive, Sensitive and Highly Sensitive.

- Click the Start New Crawl button
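To make the Crawl Depth setting more concrete, here is a minimal Python sketch of counting clicks from the starting URL with a breadth-first search. The link graph `SITE_LINKS` and the function names are hypothetical and only illustrate the idea; this is not how the Site Audit crawler is necessarily implemented.

```python
from collections import deque

# Hypothetical link graph: each page maps to the pages it links to.
SITE_LINKS = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/post-1"],
    "/shop/": ["/shop/categories"],
    "/shop/categories": ["/shop/categories/shoes"],
}

def click_depths(links, start):
    """Return the minimum number of clicks needed to reach each page from start."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def pages_within_depth(links, start, max_depth):
    """List the pages reachable in at most max_depth clicks from start."""
    depths = click_depths(links, start)
    return sorted(page for page, depth in depths.items() if depth <= max_depth)

print(pages_within_depth(SITE_LINKS, "/", 1))
# ['/', '/blog/', '/shop/']
```

In this sketch, a depth of 1 covers only the pages linked directly from the starting URL, while a depth of 3 would also reach `/shop/categories/shoes`.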

After clicking the Start New Crawl button, the settings selected and filters added in Advanced Settings are saved for future crawls until you change them.
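The Exclude Folders, Include Folders and Exclude Text filters described above can be pictured with a short Python sketch. The function `should_crawl` is hypothetical, and the matching rules it uses (folder matching on the URL path, case-sensitive text matching, exclusions taking priority over inclusions) are assumptions made for illustration; the actual crawler may behave differently.

```python
from urllib.parse import urlsplit

def should_crawl(url, exclude_folders=(), include_folders=(), exclude_text=()):
    """Decide whether a URL passes the filters (assumed rules, see note above)."""
    path = urlsplit(url).path or "/"

    def in_folder(folder):
        # Assumption: a folder matches itself and anything beneath it.
        folder = folder.rstrip("/")
        return path == folder or path.startswith(folder + "/")

    # Assumption: Exclude Text is a plain substring check on the full URL.
    if any(text in url for text in exclude_text):
        return False
    if any(in_folder(folder) for folder in exclude_folders):
        return False
    # Assumption: when Include Folders is filled in, only those folders are crawled.
    if include_folders and not any(in_folder(f) for f in include_folders):
        return False
    return True

excluded = ["/shop/categories", "/company-info"]
print(should_crawl("https://www.example.com/shop/categories/shoes", exclude_folders=excluded))
# False
print(should_crawl("https://www.example.com/shop/cart", exclude_folders=excluded))
# True
```

Folder entries are written without the domain name, which is why the sketch compares them against the URL path only.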

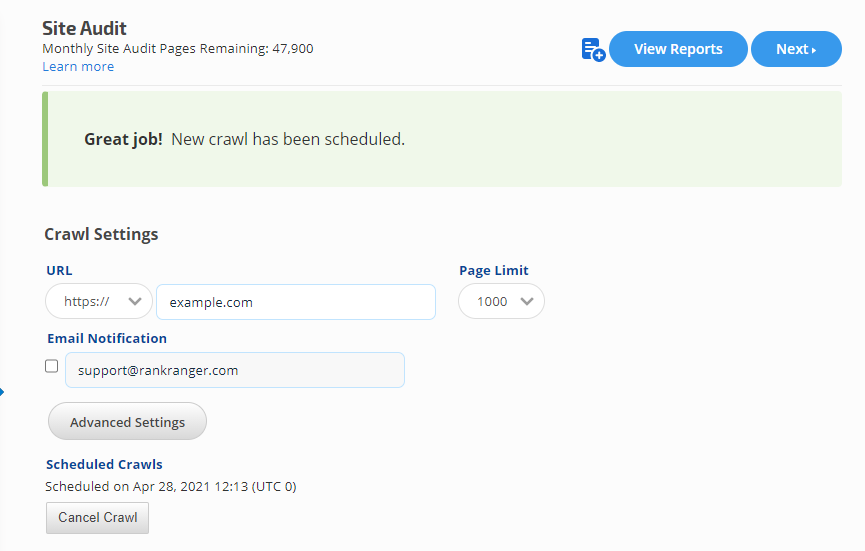

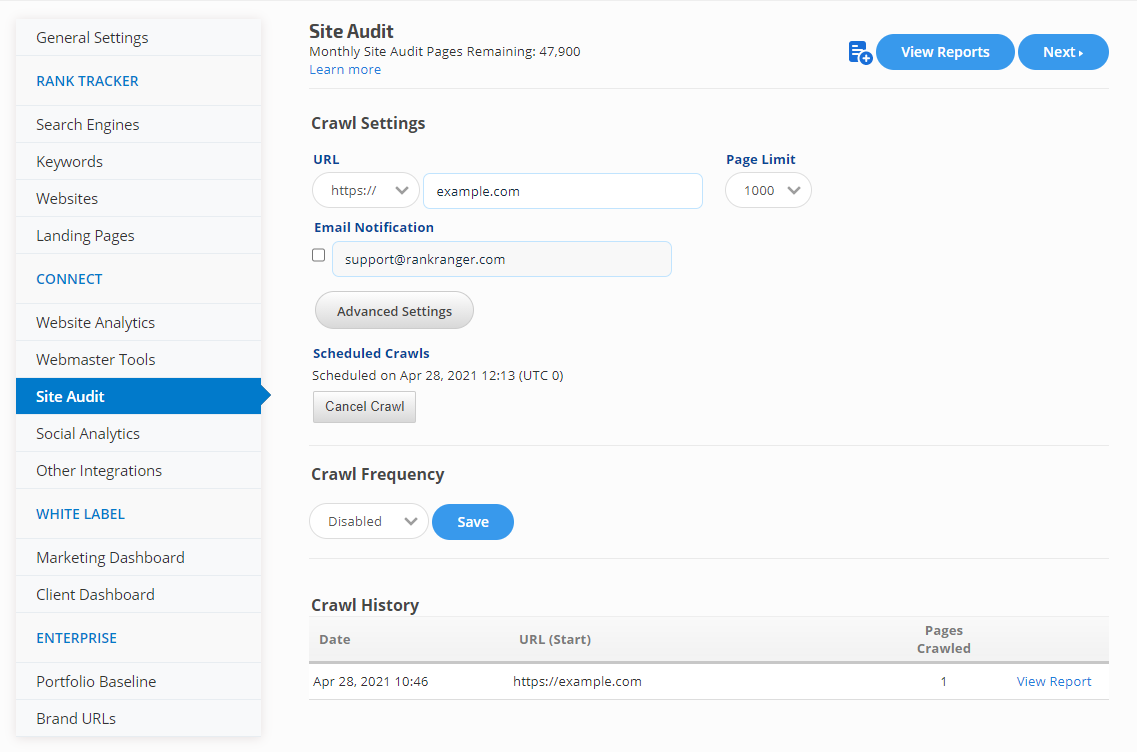

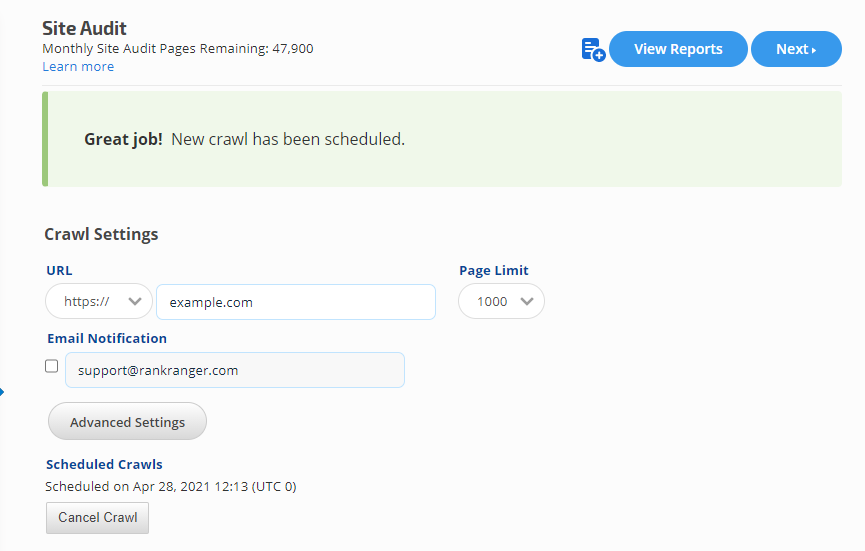

Crawl Successfully Scheduled

After clicking the Start New Crawl button, you should see a success message and a new Scheduled Crawls section that provides the date and time the crawl was requested. It can take approximately 3 hours for a crawl to be completed, depending upon the number of pages and crawl depth requested.

If you accidentally schedule a crawl (e.g., you realize that you want different settings) and want to stop it, click the Cancel Crawl button.

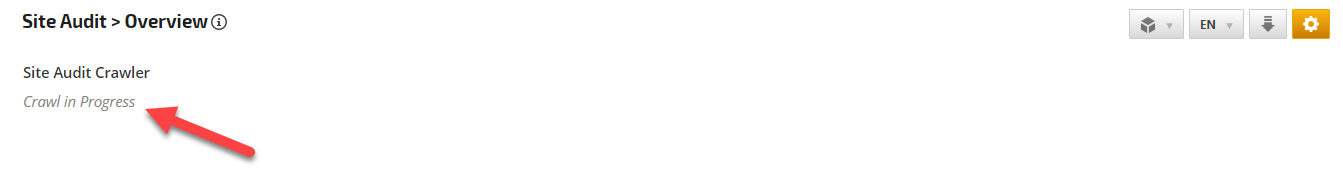

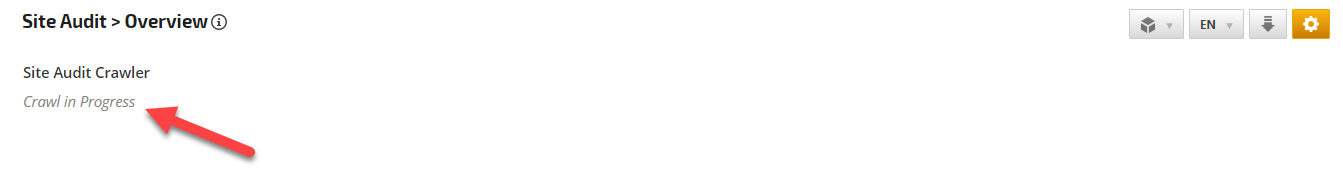

Crawl in Progress

If you attempt to view Site Audit reports before a crawl has completed, the report displays a "Crawl in Progress" message.

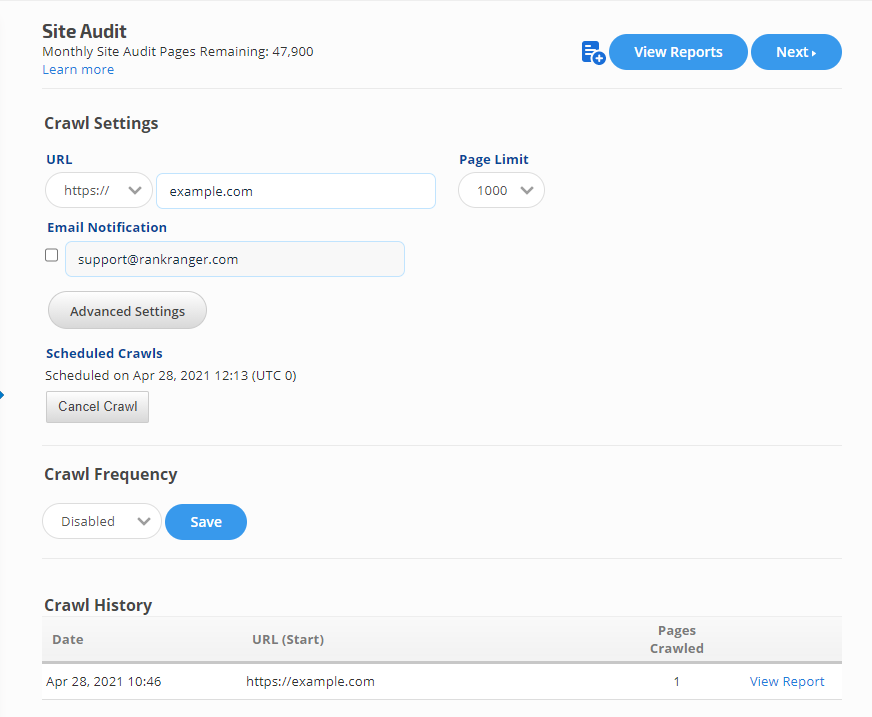

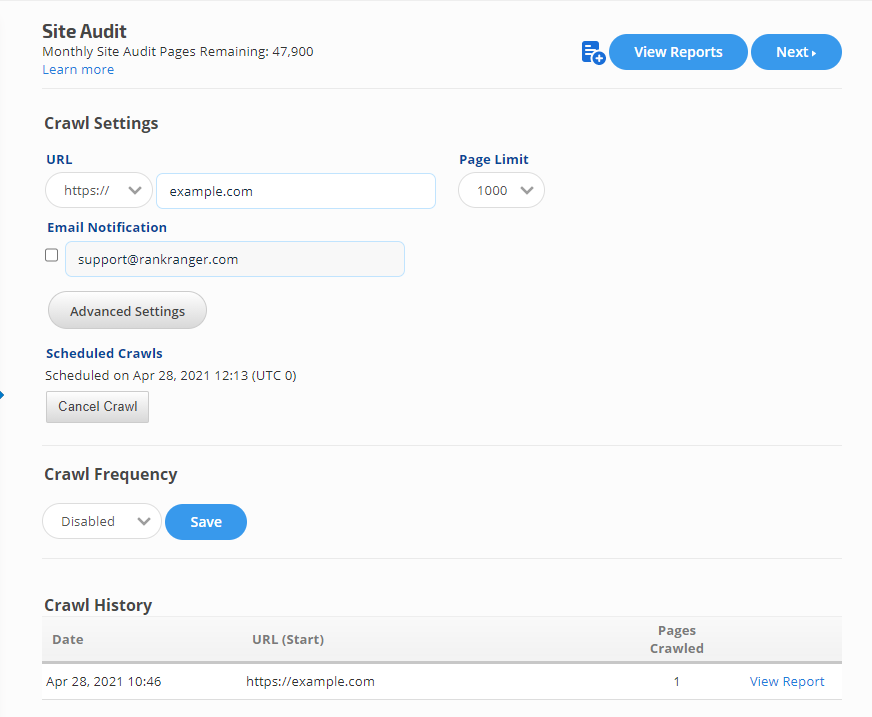

Crawl History

When the Site Audit has been completed, you'll find the date, starting URL and number of pages crawled in the Crawl History section of the Campaign Settings > Site Audit screen. You can click the "View Report" link to access the Site Audit Overview report.

In the above example, the Site Audit returned only 1 page for a request of 1000 pages. The domain was entered without "www", and the tested site does not redirect from https://example.com to https://www.example.com.
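One way to picture why a host mismatch can produce a 1-page crawl is the following Python sketch. It assumes, purely for illustration, that a crawler only follows links whose host exactly matches the domain entered; the helper names and the list of links are hypothetical.

```python
from urllib.parse import urlsplit

def same_host(start_url, link_url):
    """True when both URLs share exactly the same host name."""
    return urlsplit(start_url).hostname == urlsplit(link_url).hostname

def in_scope_links(start_url, links):
    """Keep only the links that stay on the starting URL's host."""
    return [link for link in links if same_host(start_url, link)]

# Hypothetical links found on the home page of a site that lives on "www".
links_on_home_page = [
    "https://www.example.com/about",
    "https://www.example.com/blog/",
]

print(in_scope_links("https://example.com/", links_on_home_page))
# []
print(in_scope_links("https://www.example.com/", links_on_home_page))
# ['https://www.example.com/about', 'https://www.example.com/blog/']
```

Under that assumption, starting from the non-"www" host leaves no links to follow, so only the starting page is audited.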

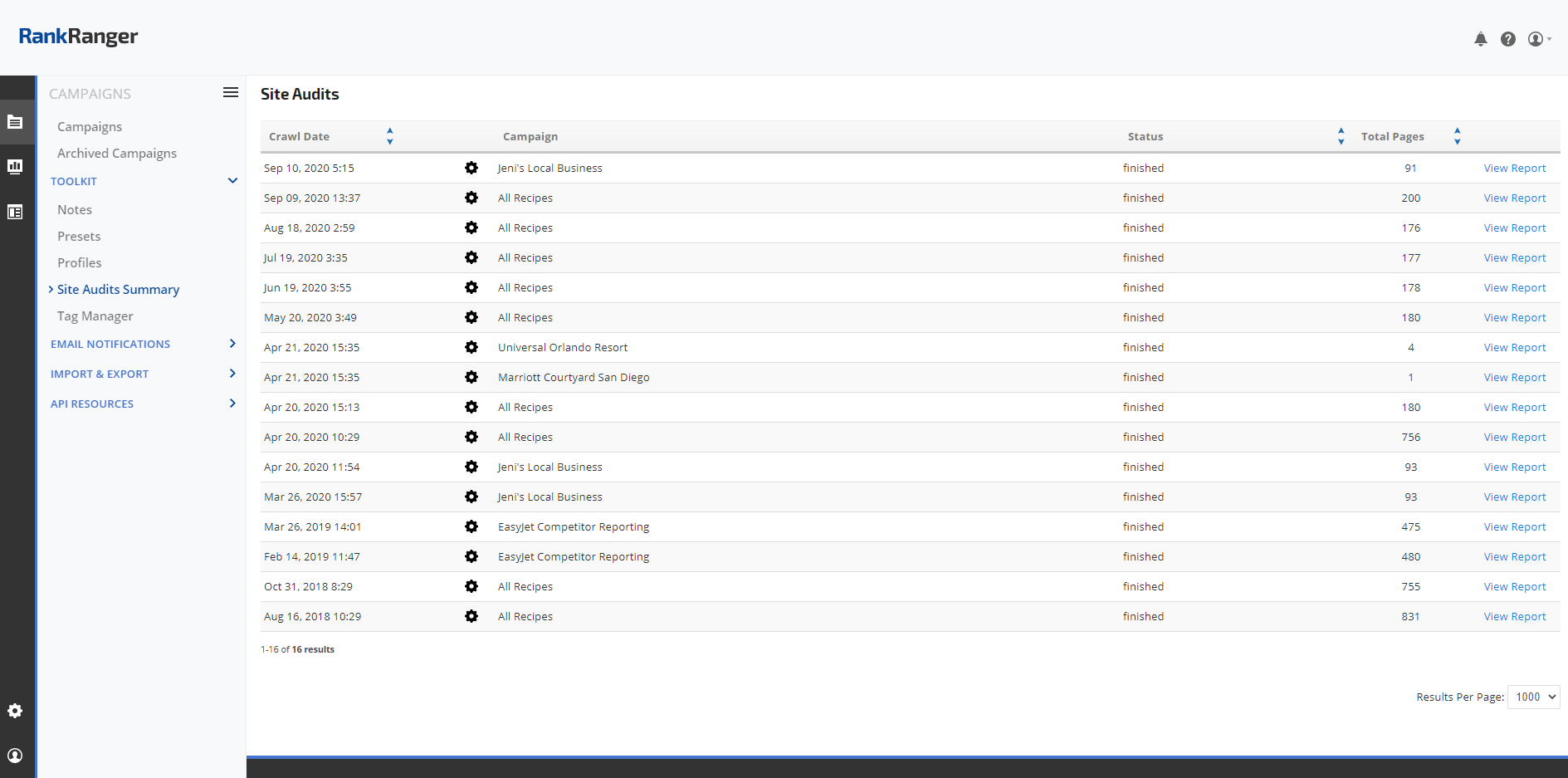

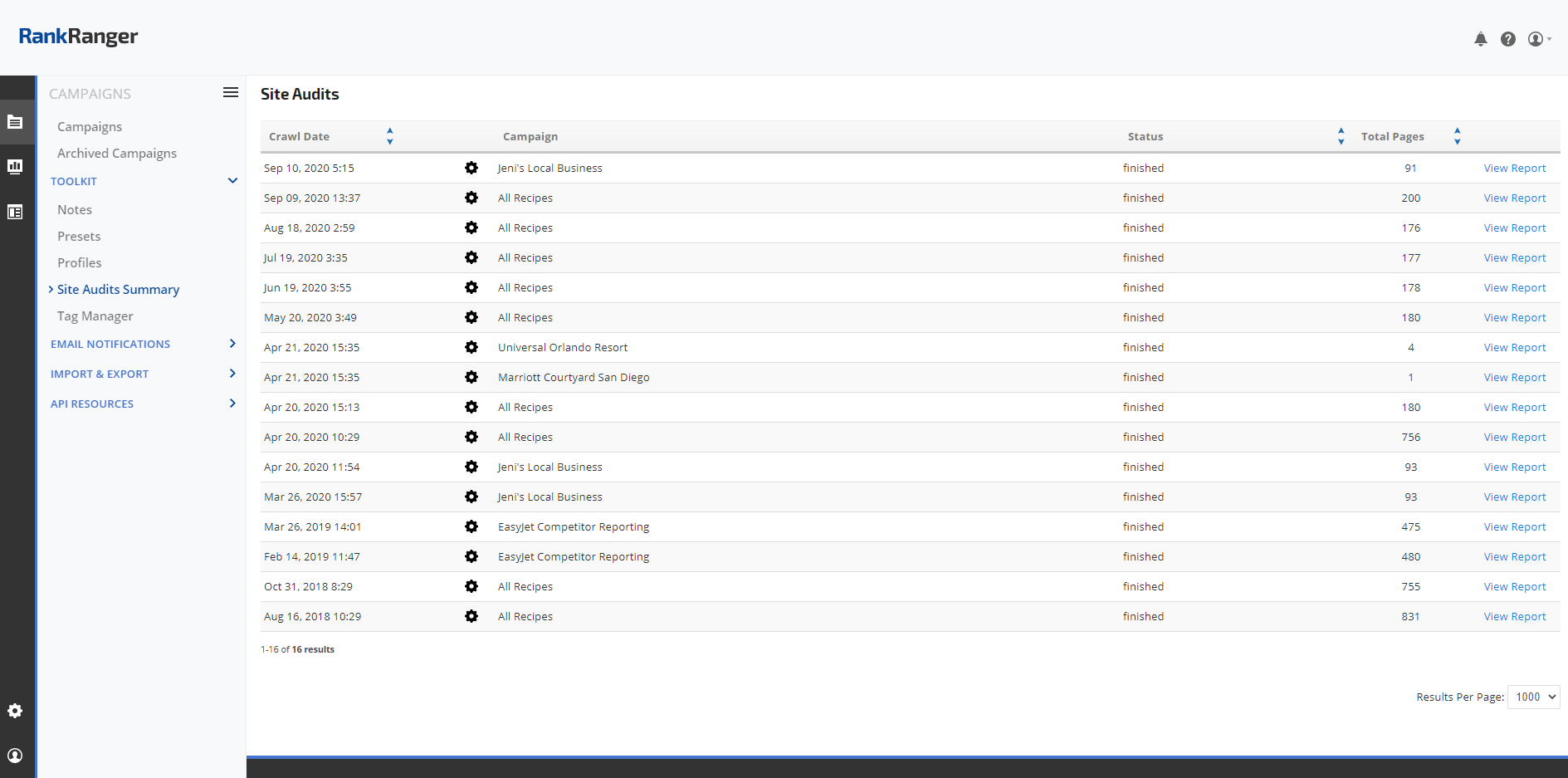

Account-wide Site Audits Summary

To view a list of all site audits run in your account, open the Campaigns > Toolkit > Site Audits Summary screen. The summary consists of the Crawl Date, Crawl Settings, Campaign, Status, Number of pages crawled and the link to the Site Audit Overview Report.

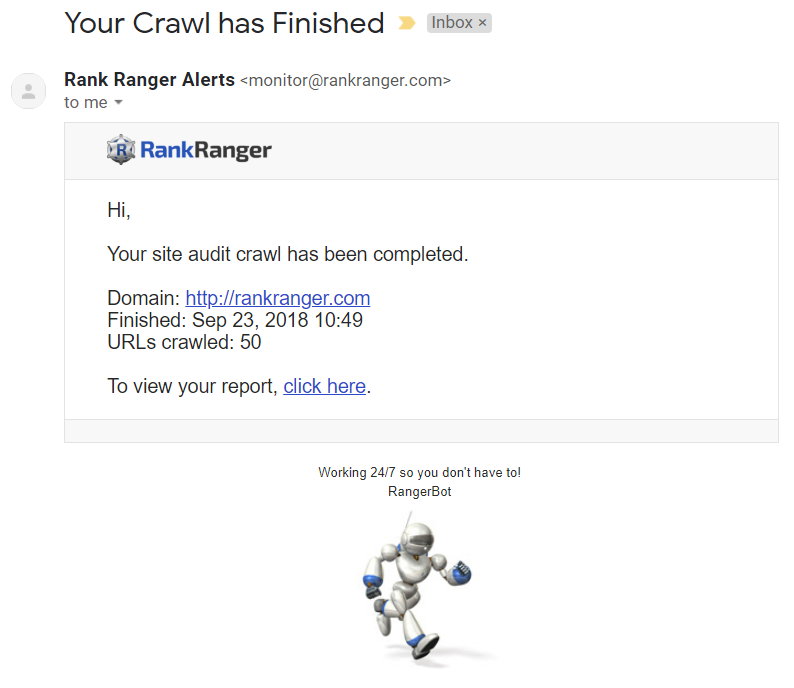

If you select the email notification option in the Advanced Settings before scheduling a new crawl, you will receive an email similar to this one when the site audit has completed. If you do not see the message within 3 hours, check your spam/junk mailbox. When you locate the message, set your email client to whitelist all email from rankranger.com.

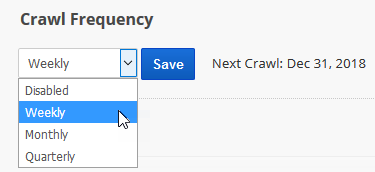

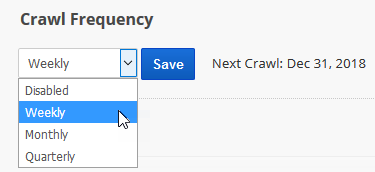

Crawl Frequency

After the first crawl has been completed, it is possible to schedule a crawl (with the same settings as the most recent crawl) to repeat weekly or monthly.
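Because the package has a monthly page limit, it can help to estimate how many pages a repeating crawl might use before choosing a frequency. The Python sketch below is a rough estimate only. It assumes that every repeated crawl counts toward the monthly limit, that each crawl uses its full Page Limit, and that a month contains at most 5 weekly crawls; the function names and the numbers are hypothetical.

```python
# Assumed upper bound on crawls per calendar month for each frequency.
CRAWLS_PER_MONTH = {"weekly": 5, "monthly": 1}

def monthly_pages_used(page_limit_per_crawl, frequency):
    """Worst-case pages consumed in a month by a repeating crawl."""
    return page_limit_per_crawl * CRAWLS_PER_MONTH[frequency]

def fits_package(page_limit_per_crawl, frequency, package_monthly_limit):
    """True when the worst-case usage stays within the package limit."""
    return monthly_pages_used(page_limit_per_crawl, frequency) <= package_monthly_limit

print(monthly_pages_used(1000, "weekly"))
# 5000
print(fits_package(1000, "weekly", 3000))
# False
print(fits_package(1000, "monthly", 3000))
# True
```

With these example numbers, a 1000-page crawl repeated weekly could exceed a 3000-page monthly allowance, while the same crawl repeated monthly would not.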